Intelligence from Inactivity: Transforming Idle GPUs into AI Powerhouses

From Text Tokens to Crypto Tokens

Over two decades ago, as a young enthusiast, I embarked on a peculiar quest. I joined millions worldwide on a journey that, despite never leaving our computer desks, sought answers from the farthest corners of the universe. It was the late nineties, and the search for extraterrestrial life was ongoing through various initiatives.

SETI@Home, a program that let our computers contribute idle CPU time to analyzing cosmic radio signals, was our "spaceship." In the end, neither my computer nor anyone else's discovered little green aliens, but we became part of the largest distributed computing project in the world.

The Berkeley SETI Research Center initially hoped to attract 50,000 to 100,000 participants. Instead, it gathered over 5 million contributors. Fast forward to today, and I find myself reminiscing about the SETI@Home days and how the project laid a foundation that could soon transform the world of artificial intelligence and large language models (LLMs).

The Current State of AI and the Need for Democratization

At the end of last year, around the week ChatGPT was first released, I wrote a piece titled 'Democratizing the Exciting New Wave of AI', in which I pondered the potential consequences of AI technology being controlled by a handful of tech giants. The goal was to explore ways of democratizing AI through open-sourcing and decentralization: making it accessible to all and enabling widespread participation in building new models.

Since then, the open-source movement has flourished with several language models, such as Falcon by TII, MPT by MosaicML, and the recent Llama 2 by Meta. These models, ranging from 7 billion to 70 billion parameters, have abilities nearing those of GPT-3.5 while being free to use and customize. Despite this progress, a significant roadblock remains: running these LLMs requires high-end GPUs with large memory, an investment beyond the reach of most users. Additionally, training the largest versions of these models from scratch requires thousands of GPUs in an industrial-grade data center and tens of millions of dollars of investment.

Now, imagine the radical evolution that could occur if the idle processing power of our everyday computers were harnessed into a massive, collective AI supercomputer: a distributed computer enabling anyone, anywhere, to run and fine-tune advanced AI models. This vision is much closer to reality today than it was just six months ago; it's precisely what PETALS has partly accomplished with its latest framework. It brings to mind the era of Napster and BitTorrent, but there's a crucial distinction: this endeavor is legal, open-source, and for the collective benefit.

Just as BitTorrent spreads the pieces of a file across a network of users, PETALS splits each model into manageable blocks and spreads them across consumer-grade computers worldwide. This lets even modest devices with entry-level GPUs contribute to the network, making it possible to run the largest AI models without cutting-edge infrastructure.
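To make the block-splitting idea concrete, here is a minimal sketch in plain Python. It is not how PETALS itself assigns work (a real swarm also needs redundancy and rebalancing as peers join and leave); the `Peer` class, the `assign_blocks` and `route` functions, the peer names, and the figure of 32 blocks are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Peer:
    name: str
    capacity: int  # number of model blocks this machine can hold (hypothetical unit)


def assign_blocks(num_blocks: int, peers: list[Peer]) -> dict[str, range]:
    """Give each peer a contiguous slice of blocks, in order, until all are placed."""
    assignment: dict[str, range] = {}
    start = 0
    for peer in peers:
        if start >= num_blocks:
            break
        end = min(start + peer.capacity, num_blocks)
        assignment[peer.name] = range(start, end)
        start = end
    if start < num_blocks:
        raise ValueError(f"swarm too small: blocks {start}..{num_blocks - 1} have no host")
    return assignment


def route(assignment: dict[str, range], num_blocks: int) -> list[str]:
    """Return the chain of peers a request visits to pass through every block in order."""
    chain: list[str] = []
    for block in range(num_blocks):
        host = next(name for name, blocks in assignment.items() if block in blocks)
        if not chain or chain[-1] != host:
            chain.append(host)
    return chain


# Illustrative swarm: three machines of different sizes sharing a 32-block model.
peers = [Peer("gaming-pc", 12), Peer("laptop", 4), Peer("workstation", 20)]
plan = assign_blocks(32, peers)
print(plan)
print(route(plan, 32))  # ['gaming-pc', 'laptop', 'workstation']
```

No single machine holds the whole model here; a request simply hops from peer to peer, each one running only the blocks it hosts.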

At this early stage, the technology is still limited to inference and fine-tuning for a handful of LLMs. It doesn't yet support training a model from scratch, and inference performance is still below what's achievable with dedicated servers. However, it's a significant validation of the approach and a great showcase of what such a system could become in the near future.

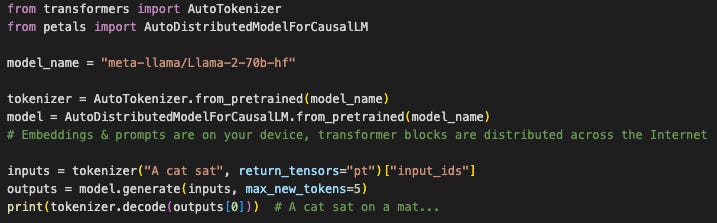

PETALS' other remarkable accomplishment lies in its user-friendliness. Despite its complex, distributed architecture, using it is as straightforward as interacting with a centralized API like OpenAI's. Here's a code example loading the largest new Llama 2 model and then using it to complete a short sentence:
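A minimal sketch of what that looks like follows. It assumes the `petals` and `transformers` packages are installed, that the public swarm is currently serving this model, and that you have been granted access to the gated `meta-llama/Llama-2-70b-hf` weights on Hugging Face. The function name `complete_with_petals` and its default arguments are illustrative, not part of the library.

```python
def complete_with_petals(prompt: str, max_new_tokens: int = 5) -> str:
    """Complete `prompt` with a Llama 2 model whose blocks run on remote peers."""
    # Imported lazily so this function can be defined without the heavy dependencies.
    from transformers import AutoTokenizer
    from petals import AutoDistributedModelForCausalLM

    # Assumption: this checkpoint is available to you and hosted by the swarm.
    model_name = "meta-llama/Llama-2-70b-hf"

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    # Only a small part of the model is loaded locally;
    # the transformer blocks themselves are served by other machines.
    model = AutoDistributedModelForCausalLM.from_pretrained(model_name)

    inputs = tokenizer(prompt, return_tensors="pt")["input_ids"]
    outputs = model.generate(inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(outputs[0])
```

Calling `complete_with_petals("A cat sat")` should return the prompt followed by a few generated tokens. Apart from the distributed model class, the code reads much like ordinary local use of `transformers`.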

Revolutionizing AI with Blockchain

The next challenge is that the successful operation of this distributed system hinges on a simple yet crucial factor: the willingness of individuals to donate their idle CPU/GPU processing power and to keep their machines running and connected to high-speed Internet around the clock. A certain level of mutual benefit exists: those who lend their resources can also use the collective AI supercomputer when needed.

However, not everyone with robust hardware needs to run or fine-tune AI models. Consider gamers, whose high-performance machines, optimized for high-resolution, high-frame-rate play, sit dormant outside gaming sessions. The potential could extend even further if platforms like PlayStation 5 and Xbox Series X allowed the integration of such a system.

GPU, Bandwidth, and Electricity: Why Give Them Away?

With its peer-to-peer sharing, BitTorrent provided a naturally balanced network of supply and demand, sustained by the regular exchange of stored media files and bandwidth. Yet this balance can't be assumed for a distributed AI inference and fine-tuning system, where the incentives for contributors and users may not organically align. This is where a new mechanism, a blockchain and crypto token model, could marry perfectly with the structure PETALS has established.

Imagine the appeal of earning crypto tokens (let's use the imaginary name 'NeuraCoin') in exchange for providing GPU processing power, bandwidth, and electricity, essentially creating a new form of mining that doesn't suffer from the inefficiency and waste of Proof of Work systems. Users could spend these tokens to access the collective supercomputer or exchange them for other coins or fiat currencies. They could also choose either to purchase 'NeuraCoins' directly and start using the supercomputer immediately, or to earn them over time by providing access to their idle processing capacity. Such a system does not require a brand-new blockchain network; it can be built on top of existing ones like Cardano or Solana.
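The earn, buy, and spend flows can be sketched with a toy in-memory ledger. This is only a stand-in for on-chain accounting, not a blockchain implementation; the `NeuraCoinLedger` class, its method names, and the flat rate of tokens per GPU-hour are all hypothetical.

```python
from collections import defaultdict


class NeuraCoinLedger:
    """Toy ledger: contributors earn tokens for GPU time, users spend them."""

    def __init__(self, tokens_per_gpu_hour: float = 1.0):
        self.rate = tokens_per_gpu_hour  # hypothetical flat exchange rate
        self.balances = defaultdict(float)

    def reward(self, contributor: str, gpu_hours: float) -> float:
        """Credit a contributor for GPU time lent to the network."""
        earned = gpu_hours * self.rate
        self.balances[contributor] += earned
        return earned

    def purchase(self, user: str, tokens: float) -> None:
        """Credit tokens bought directly (payment handling is out of scope)."""
        self.balances[user] += tokens

    def spend(self, user: str, gpu_hours: float) -> float:
        """Debit a user for GPU time consumed on the network."""
        cost = gpu_hours * self.rate
        if self.balances[user] < cost:
            raise ValueError("insufficient NeuraCoin balance")
        self.balances[user] -= cost
        return cost


ledger = NeuraCoinLedger(tokens_per_gpu_hour=2.0)
ledger.reward("alice", gpu_hours=3.0)   # Alice lends her idle GPU for 3 hours
ledger.spend("alice", gpu_hours=1.0)    # ...and later uses 1 hour herself
ledger.purchase("bob", tokens=10.0)     # Bob buys tokens to start right away
ledger.spend("bob", gpu_hours=5.0)
print(dict(ledger.balances))            # {'alice': 4.0, 'bob': 0.0}
```

A real token economy would replace the flat rate with a market price and move the balances on-chain, but the two ways of obtaining tokens and the single way of spending them stay the same.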

The dynamics of such an ecosystem could lead to a better balance of supply and demand, provided certain conditions are met. Higher demand for AI computational resources could, theoretically, motivate individuals with idle processing power to contribute it in exchange for 'NeuraCoins,' thereby expanding the network's capacity. Conversely, an increase in supply could, under ideal conditions, lower the cost of computational resources through competition among contributors. This dynamic could make the distributed AI supercomputer more accessible to the users who need it.

An individual's incentive to contribute to such a system comes down primarily to comparing Internet bandwidth and electricity costs against the potential token earnings and their market value. This equation depends predominantly on geography. One possible solution is to build these considerations into the token economy: for instance, users who contribute processing power from regions with higher operational costs would receive greater token compensation. However, such a setup would effectively make those using the distributed capacity pay a premium for accessing nodes in higher-cost locations. To optimize this dynamic, an algorithm could allocate the most cost-effective nodes first and tap into pricier ones incrementally as network capacity approaches saturation. Such a prioritization mechanism could help create a more equitable, efficient, and scalable distributed AI ecosystem.
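One way to picture that prioritization is a simple greedy allocator: sort nodes by cost and fill demand from the cheapest upward. The sketch below assumes each node advertises a single per-GPU-hour price in tokens that already folds in local electricity and bandwidth costs; the `Node` class, the `allocate` and `total_cost` functions, and every figure are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    gpu_hours_available: float
    cost_per_gpu_hour: float  # tokens; assumed to reflect local electricity + bandwidth


def allocate(nodes: list[Node], gpu_hours_needed: float) -> list[tuple[str, float]]:
    """Fill demand from the cheapest nodes first, spilling to pricier ones only when needed."""
    plan: list[tuple[str, float]] = []
    remaining = gpu_hours_needed
    for node in sorted(nodes, key=lambda n: n.cost_per_gpu_hour):
        if remaining <= 0:
            break
        take = min(node.gpu_hours_available, remaining)
        if take > 0:
            plan.append((node.name, take))
            remaining -= take
    if remaining > 0:
        raise ValueError(f"network saturated: {remaining} GPU-hours cannot be served")
    return plan


def total_cost(nodes: list[Node], plan: list[tuple[str, float]]) -> float:
    """Token cost of a plan, charging each node's own price."""
    price = {n.name: n.cost_per_gpu_hour for n in nodes}
    return sum(price[name] * hours for name, hours in plan)


nodes = [
    Node("node-a", gpu_hours_available=10.0, cost_per_gpu_hour=3.0),
    Node("node-b", gpu_hours_available=8.0, cost_per_gpu_hour=2.0),
    Node("node-c", gpu_hours_available=5.0, cost_per_gpu_hour=1.0),
]

light = allocate(nodes, 6.0)    # fits mostly on the cheapest node
heavy = allocate(nodes, 15.0)   # demand forces the priciest node into use
print(light, total_cost(nodes, light))  # [('node-c', 5.0), ('node-b', 1.0)] 7.0
print(heavy, total_cost(nodes, heavy))  # [('node-c', 5.0), ('node-b', 8.0), ('node-a', 2.0)] 27.0
```

Under light load, users pay only the lowest prices; the premium for higher-cost locations appears only once cheaper capacity is exhausted.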

Hugging Face, the popular hub for hosting and running AI models, may be ideally positioned to architect that kind of system, provided it doesn't conflict with its prevailing centralized business model.

Envisioning such a thrilling and ambitious AI endeavor would be incomplete without contemplating the possibility of a doomsday scenario.

So, what could go wrong?

One of the most positive pieces I have read on the topic is ‘Why AI Will Save the World’ by Marc Andreessen. Although I agree with many of the article's optimistic premises, it borders on utopian idealism and needs a pinch of reality for balance. During an interview, Marc casually dismisses the threat of AI dominion, assuming that an AI would have to design and fabricate its own GPUs and servers independently.

The reality is that there's already plenty of cloud computing capacity accessible through APIs. This could allow an AI, via a few lines of generated code, to remotely instantiate virtual machines, transfer data, and ultimately replicate itself in a quasi-organic manner. But, given that cloud infrastructure is controlled by centralized private corporations with dedicated cybersecurity teams, one could reasonably assume that the risk is still relatively containable.

On the other hand, consider the implications of constructing a vast, massively distributed computer system. Imagine countless machines with disparate security levels scattered across the globe, all linked together. We might unintentionally open Pandora's box by creating the ideal hardware platform for malicious AI code to execute and spread across the globe like a digital wildfire that is impossible to control.

In my view, this risk isn't merely hypothetical; it's a looming shadow we must take into account as we blueprint the architecture and inner workings of such a supercomputer. It should be equipped with some sort of ‘red kill switch.’

This is challenging to achieve, given the decentralized nature of the network, yet absolutely essential. Beyond the technical aspect, many governance questions remain open: Who gets to press the emergency button? How many people should have this power? How do we pick them? What are the triggers for a 'shutdown decision'?

Final Thoughts

To close on an uplifting note, I still prefer to envision a world where we are not dependent on a handful of large, centralized, closed AI providers: a world where our devices don't merely sit idle, awaiting our commands, but actively contribute to a massive, global, decentralized AI supercomputer. Every self-driving car at rest or hooked to a charging station, every gaming PC and console during its off-hours, every office desktop machine at night: all could be powering beneficial AI operations, becoming integral to the fabric of our digital future.

Still, it is critical to take the risks seriously and to design protective mechanisms into such a network from its earliest versions. We must therefore reflect deeply on one question: how can we strike the delicate balance between decentralized operation and holding onto the thread of safety and control?

Additional Reading

Meet Petals: AI That Can Run 100B+ Language Models At Home | Aneesh Tickoo

Petals: Collaborative Inference and Fine-tuning of Large Models | BigScience

Petals on GitHub | BigScience

Videos

Torrent for Running Huge GPT-LLMs | Prompt Engineering